Q&A: The evolving workflows of VR creation and production

As part of our Focus on Virtual coverage, we recently had a chance to speak with John Fragomeni, a creative and entertainment media executive, and Andy Cochrane, an immersive creator and director, about their most recent virtual reality production along with the changes currently impacting VR production.

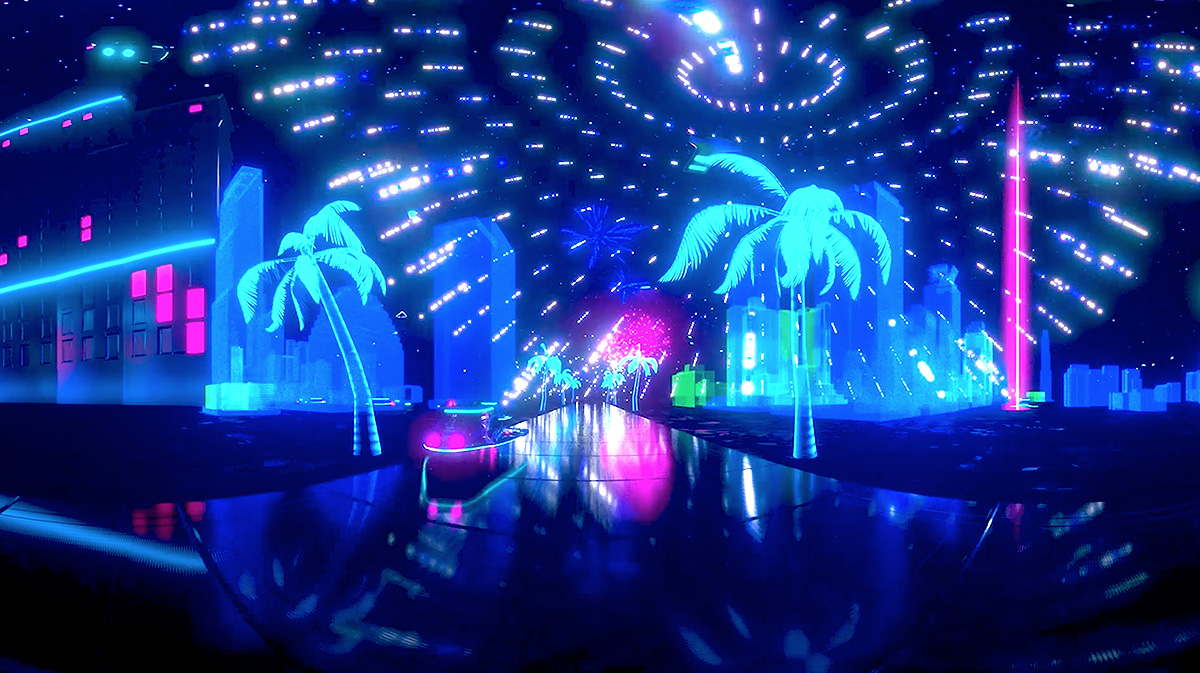

R+R Partners contracted Fragomeni and Cochrane to produce five unique virtual reality (VR) experiences and worked closely with the team at Sunset West Media on the creative development and crafting of this VR journey. “Vegas: Alter Your Reality” was produced for the Las Vegas Convention and Visitors Association (LVCVA) for the annual Art Week and Art Basel festival in Miami from December 4 – 10. The work is art in VR, interpreting Las Vegas as a virtual experience through the minds and eyes of five artists – Adhemas Batista, Beeple, Fafi, Insa and Signal Noise.

Fragomeni and Cochrane have been at the forefront of VR production since 2013, and have more than 20 years of experience in live action, design, animation, post-production and high-end visual effects. Fragomeni has extensive experience in film, TV, music videos and commercials, including feature film work on “Pacific Rim,” “Terminator Salvation” and “Pirates of the Caribbean.” Cochrane has directed top immersive experiences for Google, Intel RealSense and USA Today.

There are many ways to capture VR footage, but what about post-production solutions?

In many ways, 360º video post-production is like traditional post, with extra custom tools and workflows sprinkled in to work in 360º. Most spherical videos are currently edited in Premiere due to its native support of VR video and Adobe’s acquisition of Mettle’s Skybox plugins for converting 2D assets into 360º and adding transitions and effects that work properly on this kind of footage. On the VFX side, all 3D software already works for VR, but requires special render settings to output a correct 360º image. While the amount of work involved in 360º CG is increased because the camera renders everything in every direction, the basic workflows are the same. For compositing, there are native tools for correctly working with VR video in After Effects and Fusion, with a plugin available for Nuke to do the same.
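To make "a correct 360º image" a little more concrete, here is a minimal sketch of how a view direction could map to a pixel in a 360º frame. It assumes the common equirectangular (lat-long) layout, which the interview does not name, and an axis convention (+z forward, +x right, +y up) chosen purely for illustration. The function name `direction_to_equirect` is hypothetical and does not come from any of the tools mentioned above.

```python
import math


def direction_to_equirect(x, y, z, width, height):
    """Map a 3D view direction to (u, v) pixel coordinates in an
    equirectangular image of size width x height.

    Assumed convention (illustrative only): +z is forward, +x is right,
    +y is up. The forward direction lands at the centre of the image.
    """
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / length, y / length, z / length

    # Longitude: angle around the vertical axis, in [-pi, pi], 0 = forward.
    lon = math.atan2(x, z)
    # Latitude: angle above the horizon, in [-pi/2, pi/2].
    # Clamp y to guard against tiny floating-point overshoot.
    lat = math.asin(max(-1.0, min(1.0, y)))

    u = (lon / (2 * math.pi) + 0.5) * width   # left edge = directly behind
    v = (0.5 - lat / math.pi) * height        # top edge = straight up
    return u, v


# Looking straight ahead in a 4096 x 2048 frame hits the image centre.
u, v = direction_to_equirect(0, 0, 1, 4096, 2048)
print(u, v)
```

Because every direction around the viewer has a pixel in this layout, the renderer has to produce the whole sphere for each frame, which is one way to read the extra workload described in the answer.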

What about workflows, how do they differ in a VR world?

The single hardest aspect of 360º video production is review. Outside of the headset, it is impossible to judge anything – the VR POV affects time, scale, distance, even color and blocking. Very few software solutions on the market now offer good headset integration, so it often requires a little bit of guesswork and then a render and a review to make any decisions.

Outside of this time-consuming hurdle, there are a lot of roadblocks that you encounter with each piece of software having different levels of support for VR, requiring awkward round trips and workarounds to get the images rendered and manipulated correctly. A good example of this is color correction – none of the established grading tools supports VR, so we have to develop methods for getting the footage in and out without creating seams or other issues. Another big hangup is mono vs. stereo 360º, where many programs do not have the ability to work with stereo properly yet, and the ones that do support it offer a somewhat primitive level of control.

Where are you seeing the biggest VR push right now in terms of content?

Live action 360º video and VR games are the two biggest types of content being produced currently, but we are seeing a very significant interest in location-based VR in the form of activations, installations, and arcades. While there is far less reach in terms of total users, location-based VR allows a much higher level of technical sophistication and hardware integration, so these experiences offer a much more exciting level of immersion. As the consumer hardware matures and expands there will be a much larger market for this type of higher-end experience, in many ways echoing the 1980s move of video games from the arcade to the home.

How is VR impacting animated content?

The biggest secret in VR right now is that the highest quality image you can create is 360º CG. Live action requires a lot of work to make seams go away and to correct for issues related to small, low-quality cameras on capture. Real-time rendering requires 90+ FPS with full interactivity, so the visuals must always be tempered against the need to maintain framerate in the face of 2k+ stereo rendering, all manner of other effects such as particles and animations, and networking, audio and other processes.
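The 90+ FPS requirement is easier to appreciate as a time budget per frame. The sketch below is plain arithmetic rather than anything from the interview; the helper name `frame_budget_ms` and the comparison frame rates (24 and 60) are assumptions added for illustration.

```python
def frame_budget_ms(fps):
    """Return the time available to produce one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Compare a real-time VR target with two common video frame rates.
for fps in (24, 60, 90):
    print(f"{fps} FPS leaves {frame_budget_ms(fps):.2f} ms per frame")
```

At 90 FPS that works out to roughly 11 ms per frame, and everything listed in the answer (both stereo views, effects, networking, audio) has to fit inside it. Pre-rendered 360º CG has no such limit, since each frame can take as long as it needs.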

With 3D packages such as Cinema 4D, we can animate, create environments, light, render, create effects, basically everything we would ever want to do and just hit render to get perfect omnidirectional stereo images. VR allows us to create CG that is fully immersive – it is a new way to experience animation – a more personal and intimate experience than traditional mediums have been able to offer audiences. Once more people realize how powerful this opportunity is, I think we are going to see an explosion of animated content in VR.

What is the biggest mistake you’re seeing right now in VR creation?

Ignoring the viewer. In a headset, the audience feels like they are there, right in the middle of the action; for them to be completely ignored by all of the characters and for their POV to be moved around callously with little regard to where they might want to go is 360º video crime #1. VR requires a new type of narrative, one in which the viewer is at least acknowledged if not ideally directly involved. The vast majority of immersive video is just a “placed camera” where some action was filmed or rendered with a 360º camera, with no thought or care put into how it will make the viewer feel, or what they might want to see.

What is the best tip for creating VR content right now?

Review everything in the headset, always. Establish a rendering and review pipeline before almost anything else, and run every frame through it. If you are not watching in the headset, you cannot possibly judge the experience you are crafting, and your instincts as a viewer will guide you more than any experience you may have in creating traditional content.

Can you describe a VR creation project that illustrates your work in this area? What was unique about this project? Can you talk about the turnaround time and any specific software and hardware tools that were particularly useful in meeting the VR content creation challenges?

Our most recent project just premiered at Art Basel in Miami – we created art animations in 360º in partnership with five different fine artists for a traveling installation for the city of Las Vegas. Each immersive video is a direct translation of the artist’s work into VR, with assets that they created converted into 3D by our team, then animated and rendered into omnidirectional stereo. The artists all come from more traditional art forms such as illustration, murals, graffiti, and graphic design, so we pulled together a team of experts in CG animation to work directly with each artist to convert and create assets that would work in 3D but match their 2D style.

We worked to develop the narratives and to storyboard each experience for several months, then guided the artists through an asset creation phase where they made individual images and style frames for our team to use as the basis for our 3D work. The core team worked for four months. Our primary tools were Cinema 4D for animation, environment creation and lighting, and Octane for rendering. We used After Effects and Fusion to composite, and we used the Skybox plugins to create some elements in After Effects and to make our WIP edits in Premiere so we could check progress in the headsets. The team was relatively small given the sheer volume of work – we generated over 2,000,000 frames in the process of creating the final 12.5 minutes of 4k stereo animation.
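For a rough sense of scale, the figures quoted above can be compared with the number of frames in the finished piece. The interview does not state the delivery frame rate or how the 2,000,000 figure was counted, so the frame rates below and the choice to count each eye as a separate frame are assumptions, and the helper names are hypothetical.

```python
def final_frame_count(minutes, fps, eyes=2):
    """Frames in the finished piece, counting each eye's image separately."""
    return round(minutes * 60 * fps * eyes)


def render_ratio(total_rendered, minutes, fps, eyes=2):
    """How many frames were generated per frame that reached the final cut."""
    return total_rendered / final_frame_count(minutes, fps, eyes)


TOTAL_RENDERED = 2_000_000   # "over 2,000,000 frames" from the interview
RUNTIME_MINUTES = 12.5       # final runtime from the interview

# The frame rates here are assumed; the source does not give one.
for fps in (30, 60):
    final = final_frame_count(RUNTIME_MINUTES, fps)
    ratio = render_ratio(TOTAL_RENDERED, RUNTIME_MINUTES, fps)
    print(f"{fps} fps: {final} final frames, about {ratio:.0f}x rendered")
```

Under either assumed frame rate, the total generated is many times the final frame count, which fits the description of a pipeline built around repeated renders and headset reviews.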
