The future of broadcast graphics is here: Behind the scenes of Zero Density’s NAB demos


The real-time graphics technology leader showcased photorealistic live augmented reality graphics and demoed a state-of-the-art virtual studio production

From gripping sports to smarter weather, the future of live broadcasts is set to be more spectacular than ever before, thanks to next-generation graphics. Zero Density proved as much with their NAB booth this year, which featured an augmented reality (AR) demo stage, alongside a state-of-the-art virtual studio. The booth gave attendees insight into the real-time graphics technologies powering daily shows, live events, and more from the world’s biggest broadcasters.

The possibilities of a virtual studio

Zero Density’s demonstrations used a virtual set provided by The Weather Channel (TWC) and designed by creative trailblazer Myreze. TWC now uses the set for daily live forecasts powered by Zero Density’s Reality suite, which shows that enormous virtual environments can be created on a relatively small green screen.

Reality Engine 4.27 was at work during the virtual studio demo. The real-time node-based compositor, which can key, render, and composite photoreal graphics, is built natively to take advantage of Unreal Engine features that make virtual studio graphics more photoreal. The virtual studio was ray traced, showing just how cinematic live graphics can be. The demo ran at full performance and with maximum stability on RealityEngine AMPERE hardware, while Reality Keyer keyed the shot and preserved valuable details such as contact shadows, transparent objects, and sub-pixel details like hair.

An insight into augmented reality

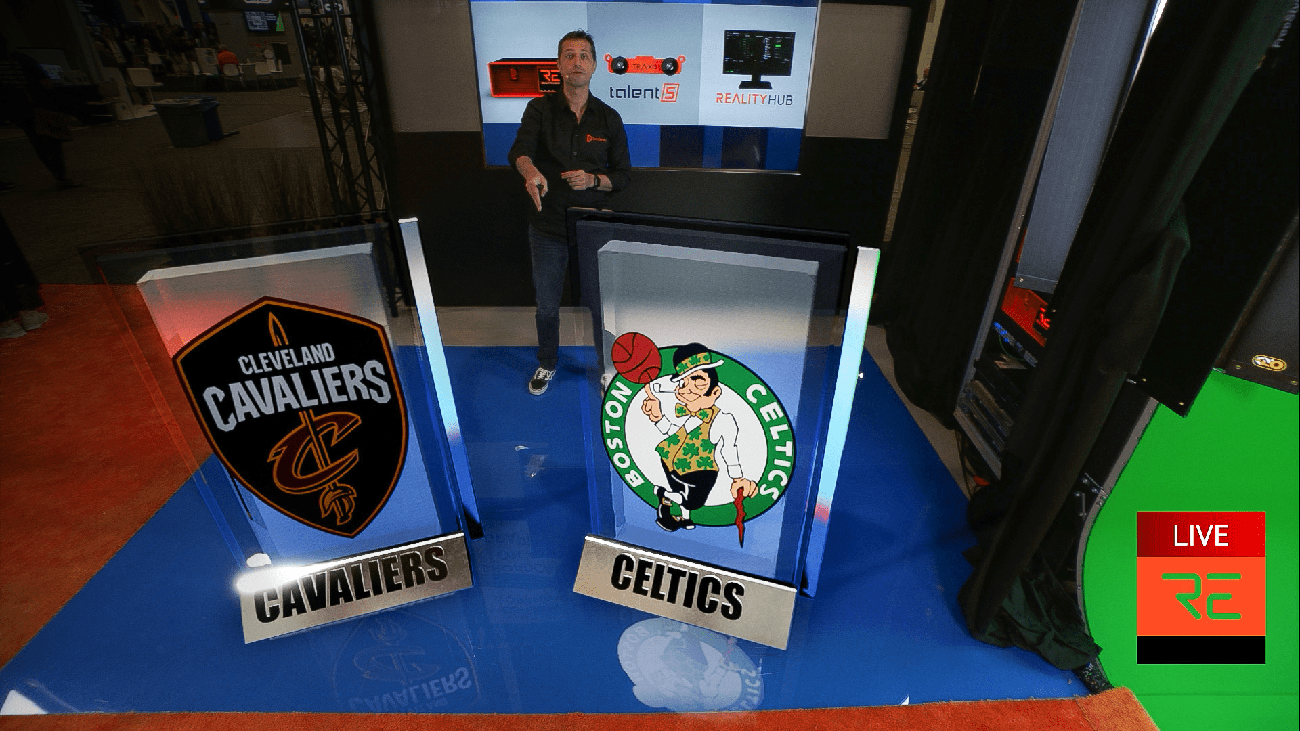

At another demo, the team revealed how photorealistic augmented reality graphics can help broadcasters enhance everything from viewer experience to storytelling and data visualization. This demo showcased a sports broadcast with AR elements that conventional fill-and-key blending methods simply couldn’t reproduce.

A feature unique to Reality Engine is real-time compositing of graphics with a variety of blending modes, similar to those in Photoshop. For example, you can additively blend a bloom effect over a lower third, or blur the incoming video signal behind the lower third.
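To make the difference between the two approaches concrete, here is a minimal per-pixel sketch of a conventional fill-and-key mix next to an additive blend. It is a generic illustration of the arithmetic only, not Reality Engine’s implementation; the function names and the `Pixel` type are hypothetical, and colors are assumed to be linear RGB values in the range 0.0 to 1.0.

```python
from typing import Tuple

# Hypothetical pixel type for illustration: linear RGB, each channel in [0.0, 1.0].
Pixel = Tuple[float, float, float]


def fill_and_key(background: Pixel, fill: Pixel, key: float) -> Pixel:
    """Conventional linear key: the key signal mixes the fill over the background.

    key = 1.0 shows only the fill, key = 0.0 shows only the background.
    """
    return tuple(f * key + b * (1.0 - key) for f, b in zip(fill, background))


def additive(background: Pixel, glow: Pixel) -> Pixel:
    """Additive blend: the glow's light is added to the background, clamped at 1.0.

    The background is never darkened, which is why bloom-style effects
    are usually blended this way.
    """
    return tuple(min(1.0, b + g) for b, g in zip(background, glow))


if __name__ == "__main__":
    video = (0.2, 0.4, 0.6)   # a pixel of the incoming video signal
    graphic = (1.0, 0.0, 0.0)  # a pixel of the graphic (fill)
    bloom = (0.5, 0.5, 0.5)    # a pixel of a bloom/glow layer

    print("fill-and-key:", fill_and_key(video, graphic, key=0.5))
    print("additive:    ", additive(video, bloom))
```

With fill-and-key, the graphic can only replace or partially cover the video underneath it. An additive layer brightens whatever is already there, so a glow cannot be expressed by a single fill and key pair without also dimming the background.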

The presenter’s reflections were cast automatically onto the AR graphics, and he had complete freedom of movement on the physical set, interacting with the graphics without ever overlapping them, thanks to the TRAXIS talentS system.

RealityHub 1.2 kept the software ecosystem connected from a single hub, feeding the AR graphics with real-time data throughout the demo. Any source could be used to feed into the graphics, including Excel files or a live data feed, without using any plug-ins or writing a single line of code. RealityHub was also used to manage and control broadcast on-air graphics such as tickers, lower thirds, split windows, and channel branding.

Previewing RealityHub 1.3

The future of real-time for broadcast looks very bright indeed. NAB Show attendees got further proof of this when RealityHub 1.3 was previewed in the Zero Density demo pods. The new version gives users more flexibility and new control mechanisms, including an updated Form Builder and advanced user management. With it, broadcasters will find it easier than ever to incorporate real-time graphics into their productions. New features of RealityHub’s newsroom workflow were also previewed through its integration with Octopus NRCS.

The above content is sponsor-generated content from Zero Density.

