Q&A: Ncam aims to improve AR usage via accurate camera tracking


Ncam has been at the forefront of depth-based camera tracking for augmented and virtual reality production.

Recently, we had a chance to speak with CEO Nic Hatch about some of the company’s solutions, including the newly launched Real Light, which aims to capture real-world lighting effects in virtual graphics.

What are the current augmented reality camera tracking options?

Ncam offers a complete and customizable augmented reality platform that enables, in real time, the creation of photorealistic virtual graphics for set extensions, virtual environments, and pre-visualisation for film and TV productions.

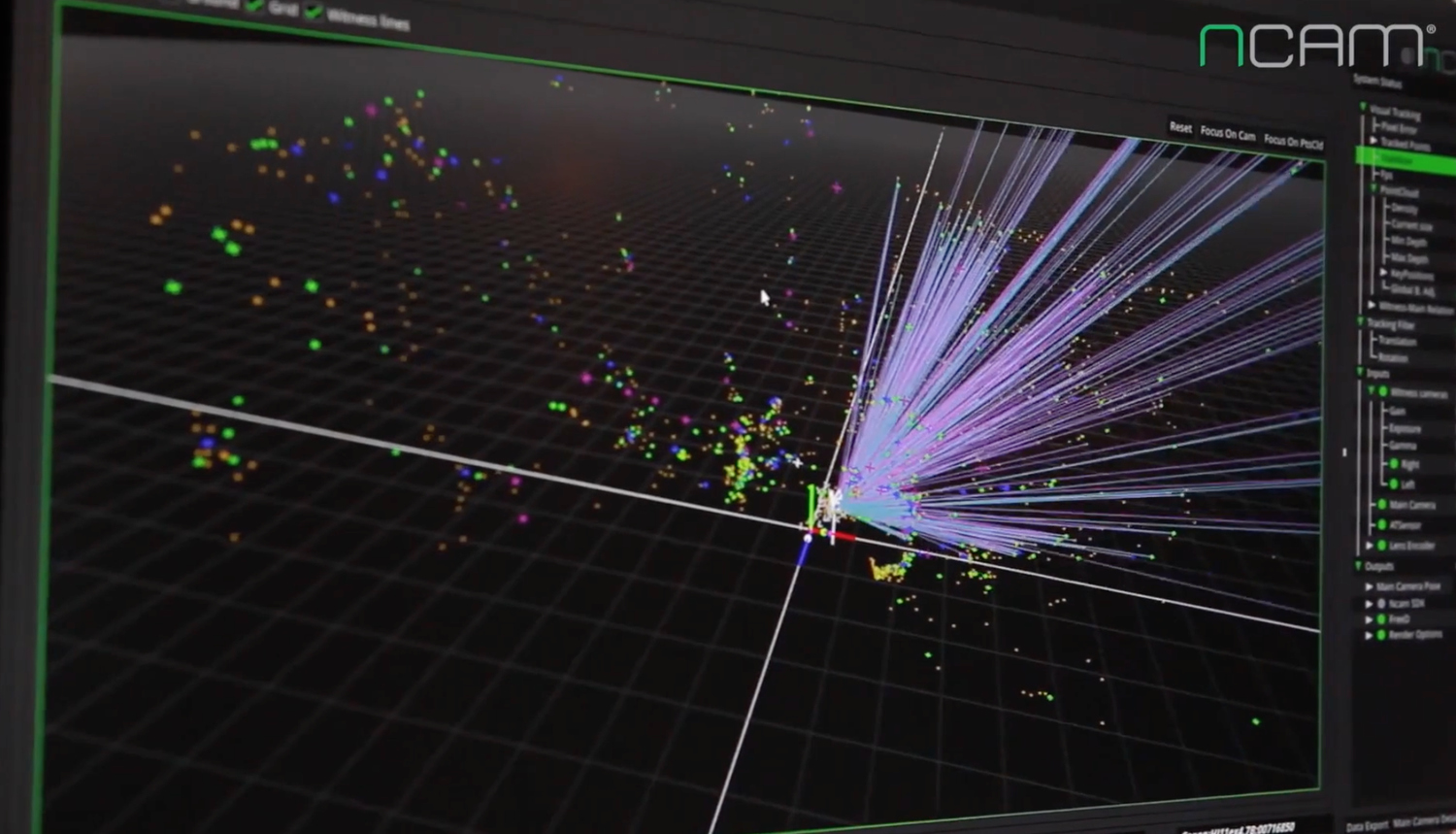

Ncam Reality is our unique marker-less camera tracking solution that tracks natural features in the environment, building up an understanding of the 3D space in front of the camera. The system is available in three configurations for broadcast environments:

Ncam Reality PTZ is best suited to broadcast setups where pan, tilt and zoom are essential. Ncam Reality Studio can be used for indoor and studio broadcast, real-time product visualization and augmented reality. Ncam Reality Event has been developed for episodic production, broadcast, large events, real-time product visualization and augmented reality.

What sets the Ncam system apart?

Our unique marker-less camera tracking solutions are automated, continuously active and accurate. As a result, there is no need for time-consuming system calibration, roof marker setup, environment teaching or surveys.

The system can be mounted to any camera, lens and rig including Steadicam, handheld, pedestal, jibs, cranes and aerial camera systems, and the ultrafast setup and instant tracking means an initialized track within one second.

There are no environment limitations when using Ncam Reality; it is not restricted to working within a volume or calibrated space, and works freely both indoors and outdoors. The system’s multi-sensor hybrid tracking technology understands 3D space, and Ncam Reality provides complete real-time positional tracking data (XYZ translations and rotations, i.e. six degrees of freedom) and lens information (focus, iris and zoom, or F, I, Z), including distortion.
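To make the shape of that data concrete, here is a minimal sketch of what a single tracking sample could look like. It is not Ncam’s data format or SDK: the `TrackingSample` class, its field names, the units and the axis convention are all assumptions chosen purely for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackingSample:
    """One hypothetical camera-tracking sample: 6DoF pose plus lens state."""

    # Translation (assumed to be metres in a y-up world).
    x: float
    y: float
    z: float
    # Rotation (assumed to be degrees: pan about y, tilt about x, roll about z).
    pan: float
    tilt: float
    roll: float
    # Lens state (assumed to be normalised to the range 0..1).
    focus: float
    iris: float
    zoom: float

    def degrees_of_freedom(self):
        """Return the six pose values: three translations, three rotations."""
        return (self.x, self.y, self.z, self.pan, self.tilt, self.roll)

    def forward(self):
        """Unit view direction from pan and tilt (zero pan looks along +z)."""
        p = math.radians(self.pan)
        t = math.radians(self.tilt)
        return (math.sin(p) * math.cos(t), math.sin(t), math.cos(p) * math.cos(t))


# A camera 1.5 m above the floor, panned 90 degrees and held level.
sample = TrackingSample(1.0, 1.5, -2.0, 90.0, 0.0, 0.0, 0.4, 0.5, 0.25)
```

A rendering engine would typically consume one such sample per video frame, positioning its virtual camera from the pose and matching field of view and depth of field from the lens values.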

The solution’s tiered product approach enables an easy and unique software-based upgrade path. As a predominantly software-based solution, it is future-proofed with regular, substantial and demonstrable improvements in core product capabilities and functionality. For example, Real Depth and Extreme – two major product developments – will be added in this way.

What systems or platforms does Ncam support?

Ncam is committed to enabling our technology to seamlessly integrate with third-party applications. As such, we collaborate with many technology vendors.

As mentioned above, Ncam Reality can be used on any camera or lens, including handheld and Steadicam. The system also integrates with all rendering engines via industry standard protocols, or via the Ncam Software Development Kit.

Ncam currently integrates with all real-time broadcast engines including Avid, Brainstorm, ChyronHego, Ross, Ventuz, Vizrt and others.

Ncam has also developed a popular UE4 plug-in for Epic Games’ Unreal Engine. UE4 is a toolset which harnesses elite games engine rendering performance, enabling unsurpassed realism.

In addition, we have just introduced a new integration between Vizrt’s real-time engine, Viz Engine, and the UE4 plug-in for the latest version of Unreal Engine, 4.20. This new architecture enables Vizrt customers to combine template-based graphics and text with Unreal Engine real-world graphic environments, to create superior-looking graphics and enhanced storytelling within live production.

Ncam Reality also supports Unity and MotionBuilder through its own proprietary plugins.

What are the advantages of depth-based camera tracking?

Depth-based camera tracking is taking realism in AR broadcast productions to a new level.

Audiences are more sophisticated than ever and expect to see virtual environments blended seamlessly with the real world – if they don’t, viewers will notice the discrepancies. We developed Real Depth to address this challenge. The system provides a ground-breaking new and unique automated technique for sensing depth. Used with a green screen, Real Depth extracts depth data in real time to allow subjects, such as a news presenter, to interact seamlessly with their virtual surroundings for immersive and synergetic visual engagement.
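The general idea behind depth-aware compositing can be sketched in a few lines. This is a generic per-pixel depth test, not Ncam’s Real Depth technique, and the function name, the one-row "image" and the labels standing in for pixel colors are all hypothetical.

```python
def composite_by_depth(real_px, real_depth, virtual_px, virtual_depth):
    """For each pixel, keep whichever layer is nearer to the camera.

    A virtual depth of None marks a pixel with no virtual content there.
    """
    out = []
    for r_px, r_d, v_px, v_d in zip(real_px, real_depth, virtual_px, virtual_depth):
        if v_d is not None and v_d < r_d:
            out.append(v_px)  # virtual object is in front of the real scene
        else:
            out.append(r_px)  # real subject occludes (or nothing virtual here)
    return out


# One scanline: a presenter 2.0 m away, with the studio backdrop at 6.0 m.
real_px = ["presenter", "presenter", "studio"]
real_depth = [2.0, 2.0, 6.0]
# A virtual desk at 1.5 m and a virtual wall at 4.0 m.
virtual_px = ["desk", "wall", "wall"]
virtual_depth = [1.5, 4.0, 4.0]

scanline = composite_by_depth(real_px, real_depth, virtual_px, virtual_depth)
```

Here the desk appears in front of the presenter and the wall behind, which is the kind of interaction a flat foreground/background key cannot produce without depth information.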

What’s the most overlooked part of AR implementation?

Content. AR is still in a relatively nascent stage for broadcast and much (though not all) of its use to date is gimmicky. AR graphics need to be seen as an integral part of the program content rather than tacked on to show off a new graphics toy, and they need to be fully immersed in the real-world environment so that the audience can’t tell it’s not real.

What’s next for enhancing realism in AR? Assuming lighting?

The quality of graphics content in broadcast is making significant steps forward by utilizing games engine technology. However, the graphics will never look truly integrated into their real-world environment if the lighting does not match; a mismatch breaks the illusion that the AR is real. The capabilities of games engines will enable this realism, but only if they are supplied with the correct lighting data.

How does Ncam’s Real Light work to solve lighting challenges?

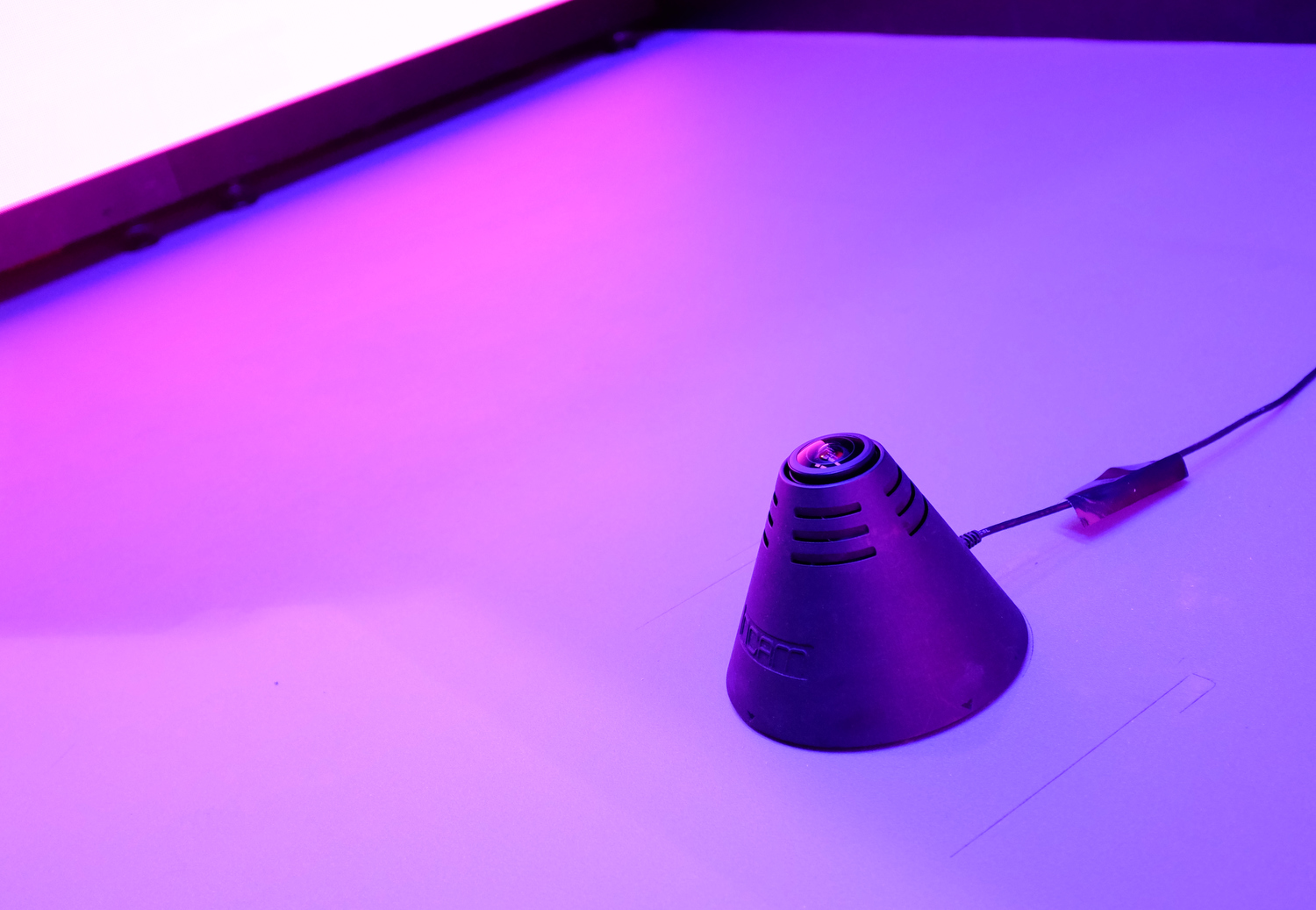

Real Light solves the common challenge of making virtual graphics look like they are integrated into the real-world scene, capturing important elements of real-world lighting (direction, color, intensity, etc.) and rendering those elements onto the virtual graphics in real time. Real Light creates the right shadows and allows objects to react ‘naturally’, adapting to every lighting change.

This is deployed via a probe-based approach, which reads the environment’s lighting state. Real Light measures and models the light in a scene, allowing virtual elements to cast shadows on actual objects and to respond to lighting changes based on the surrounding environment in real time.
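As a rough illustration of why measured direction, color and intensity matter, the sketch below applies a single measured light to a virtual surface using the textbook Lambertian diffuse model. It is not how Real Light itself works; the function names and values are hypothetical, and a real renderer would use far richer lighting and shadowing.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lambert_shade(albedo, normal, light_dir, light_color, intensity):
    """Diffuse color of a surface lit by one directional light.

    light_dir points from the surface toward the light.
    """
    n = normalize(normal)
    l = normalize(light_dir)
    # Surfaces facing away from the light receive no direct illumination.
    n_dot_l = max(0.0, sum(a * b for a, b in zip(n, l)))
    return tuple(a * c * intensity * n_dot_l for a, c in zip(albedo, light_color))


# A white, upward-facing virtual surface under a warm light directly overhead.
lit = lambert_shade((1.0, 1.0, 1.0), (0.0, 1.0, 0.0), (0.0, 1.0, 0.0),
                    (1.0, 0.5, 0.25), 2.0)
# The same surface when the measured light comes from below.
unlit = lambert_shade((1.0, 1.0, 1.0), (0.0, 1.0, 0.0), (0.0, -1.0, 0.0),
                      (1.0, 0.5, 0.25), 2.0)
```

If the studio lights are dimmed or moved, feeding the updated direction, color and intensity into the same calculation changes the virtual surface accordingly, which is the behavior described above.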

The first iteration of Real Light will include all the hardware to integrate a live studio environment and CG elements, and will be rendered within our Unreal Engine integration in real time.

