Industry Insights: Camera tracking moving towards integrated workflow


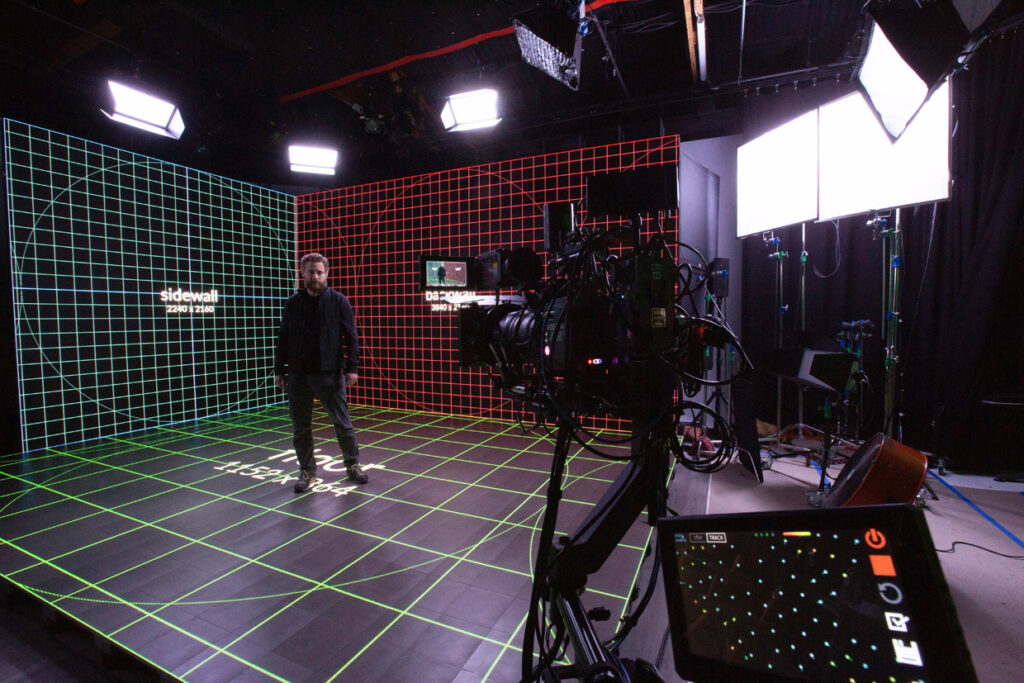

Camera tracking systems continue to evolve to meet the changing needs of broadcasters, helping present augmented reality graphics on-air and even create virtual worlds through XR stages.

In this installment of our Industry Insights roundtable, our experts from the field of camera tracking technology share their thoughts on trends such as XR production and advancements to look forward to.

What challenges still exist with camera tracking for broadcast production?

“In some ways, there’s a perception problem at play, where camera tracking is generally thought of as complex or requiring a steep learning curve. In the past, there was some truth to that. Most installations, regardless of provider, required an operator or someone versed in the tech. These days, we can get someone up and running in under an hour. We just saw this in Portugal, where one of our first Mk2 customers went live to air in a couple of days. So, breaking down that mental hurdle of ‘can we do this?’ should free more broadcasters up to start experimenting with the technology. Once they do, the vast majority will catch right on,” said Mike Ruddell, global director of business development for Ncam.

“Precision camera tracking is a mature technology today, but there are always improvements in tracking accuracy, higher frame rates, simpler lens calibration, and extended uses (e.g. Mo-Sys Cinematic XR Focus) that will mean it will continue to be developed and refined,” suggested Mike Grieve, commercial director for Mo-Sys.

“XR productions require very accurate camera tracking, especially for set extension. XR rentals require fast setup time, and closed XR stages do not allow traditional optical camera tracking,” answered Thorsten Mika, managing partner at TrackMen.

What are common concerns or questions you’re hearing from customers?

“There are a lot of concerns that investing in a tracking solution will limit or restrict how they work. For example, will choosing Ncam restrict the graphics platform they use, their camera rig selections or the environment they want to shoot in? The answer is no, but what’s behind that is this desire for flexibility. New tech should work with legacy tech, so companies get a seamless transition as they begin to broaden out their virtual graphics. But at the same time, broadcasters also want technology to open their productions up to something new. So when they start assessing tracking options and are thinking about what’s going to get them there—fixed cameras (indoor-only) or hybrid cameras (inside and out)—most times they choose the latter for flexibility. They don’t want to be limited. They want something that will work just as well in a newsroom as it does a snowy field, because then they can tell whatever story they want,” Ruddell explained.

“For green screens, the questions tend to be about how to create ever more realistic blending between the virtual world (Unreal Engine graphics) and the real world (the studio space and talent). This encompasses set extensions, lighting matching, ray tracing etc. For LED walls, because this technology and its workflow are so different, the questions are about configuration, set up, and general operational questions such as, ‘How do I avoid moiré?’” said Grieve.

What workflow enhancements do you see coming to camera tracking?

“I think we are moving to a ‘plug & play’ solution that is totally de-skilled. That’s one of the reasons we invested in a Web UI. Every year, the tech has to be more approachable. This is especially true when you’re talking about how it moves from the national networks and sports channels into the local news affiliates and minor leagues. They want state-of-the-art graphics too, but don’t have the same institutional resources. If camera tracking can become plug & play, you make real-time graphics more accessible up and down the chain. So that’s where we’re going,” predicted Ruddell.

“We see camera tracking being more deeply integrated into both production and post-production workflows. We are now integrating our tracking system StarTracker directly to cameras to extract complete datasets to aid post-production compositing. We’re also utilizing the cloud to run parallel virtual production workflows to improve real-time graphics quality in a final pixel shoot. So the enhancements are coming around camera tracking, rather than in camera tracking itself,” Grieve explained.

“Tracking should become more affordable; this is achievable through faster setup times, less effort in installing markers, and more flexibility for different setups,” Mika said.

What advancements or updates are coming to your products this year?

“Alongside the ongoing evolution of the products, I’m really looking forward to the release of our Mk2 connection box. Listening to feedback we understand that mounting our Mk2 server to the rig, even though it’s incredibly small and light, is not always ideal. The connection box will allow it to be located anywhere on the network, and further reduce size and weight of hardware on the camera,” said Ruddell.

“Honestly, too many to mention! Mo-Sys is developing multiple virtual production technologies to improve LED volume workflows, to reinvigorate green/blue screen workflows, and to bring to market the next generation of robotic virtual production solutions. The next 12 months are going to be busy,” Grieve responded.

“We just released a brand new tracking system called GhosTrack which uses a hidden reference image at an LED screen. The patented technology allows us to hide this image for the human eye and for the broadcast camera, but make it visible for our tracking system only. The result is an easy to use but very precise tracking. It is super quick to install and works where traditional tracking technologies fail,” said Mika.

How has the move to virtual events and XR production changed your business?

“Remote and virtual events were gradually evolving pre-pandemic, but the events of the last year have been a huge catalyst in their evolution. For our business, this has seen demand soar as producers look to add augmented elements to make up for the lack of audience and atmosphere. It remains to be seen to what extent live events retain these operational models when life returns to normal; however, I suspect with the cost, operational and environmental benefits virtual events will still have a part to play,” Ruddell said.

“We have never been busier! Double digit growth last year, and the same forecast for this year, has meant that recruitment has suddenly become our bottleneck… well, that and GPU cards thanks to Bitcoin entrepreneurs! We’re incredibly fortunate that our product and solution portfolio directly maps into the demand for virtual events, remote events, and XR. In addition, our rate of innovation means that we’re frequently in the headlines, and that has helped drive new business to us,” said Grieve.

“Significant growth, new clients who never thought about AR or XR. Therefore it is mandatory that the technology becomes easy to use for non-experts,” Mika told us.

Participants

Mike Ruddell, Ncam

Mike Grieve, Mo-Sys

Thorsten Mika, TrackMen

